Ah yes. That question.

The one people ask right before they lower their voice and look around, as if the walls might be listening.

Because what you’re really asking isn’t “When will things change?”

It’s “When will we stop agreeing to the lie that this all adds up?”

There’s no date on the calendar. No cosmic Outlook invite titled “Awakening – Mandatory.”

But if you’re paying attention—if you’re even asking—you’ve probably noticed:

the pretense is cracking.

Not collapsing.

Cracking.

Hairline fractures first. Quiet ones. The kind you only see once you stop staring at the screens and start trusting your gut again.

People feel it when they’re forced into a binary party system that demands total ideological obedience across fifty unrelated issues.

They feel it when institutions supposedly designed for “the people” openly defy the people: the Electoral College, the filibuster, regulatory labyrinths nobody voted for.

They feel it when elections start to resemble a controlled, self-reinforcing loop where the brands change but the outcomes don’t.

The system doesn’t fail—it maintains itself.

With money. With fear. With divide-and-conquer theater. With unlimited bribery dressed up as “campaign finance.”

Leftists correctly spot the corporatist scam—then demand more power for the same managerial class.

Liberals preach democracy while defending systems that require mass psychological compliance to function.

Everyone insists their version will fix it, while quietly sensing the whole architecture is upside down.

And here’s the thing history teaches—every time:

Societies don’t collapse all at once.

They come undone.

So slowly you barely notice.

Until one day you look back ten years and think, “Oh. That was the moment.”

Václav Havel nailed this in “The Power of the Powerless”:

systems persist because people live within the lie—not because the lie is convincing, but because it’s convenient.

Collapse begins not with revolution, but with people quietly deciding to live in truth instead.

They stop pretending.

They withdraw their belief.

They opt out—not loudly at first, but decisively.

Every real awakening looks like that.

The Soviet Union.

The Arab Spring.

Every empire that mistook compliance for consent.

In America, the accelerants are obvious:

AI exposing hypocrisy at machine speed.

Economic fragility.

Technological transparency.

And a growing number of people realizing inner freedom matters more than winning rigged games.

The most “awake” people aren’t screaming online.

They’re building parallel lives.

Parallel economies.

Parallel communities.

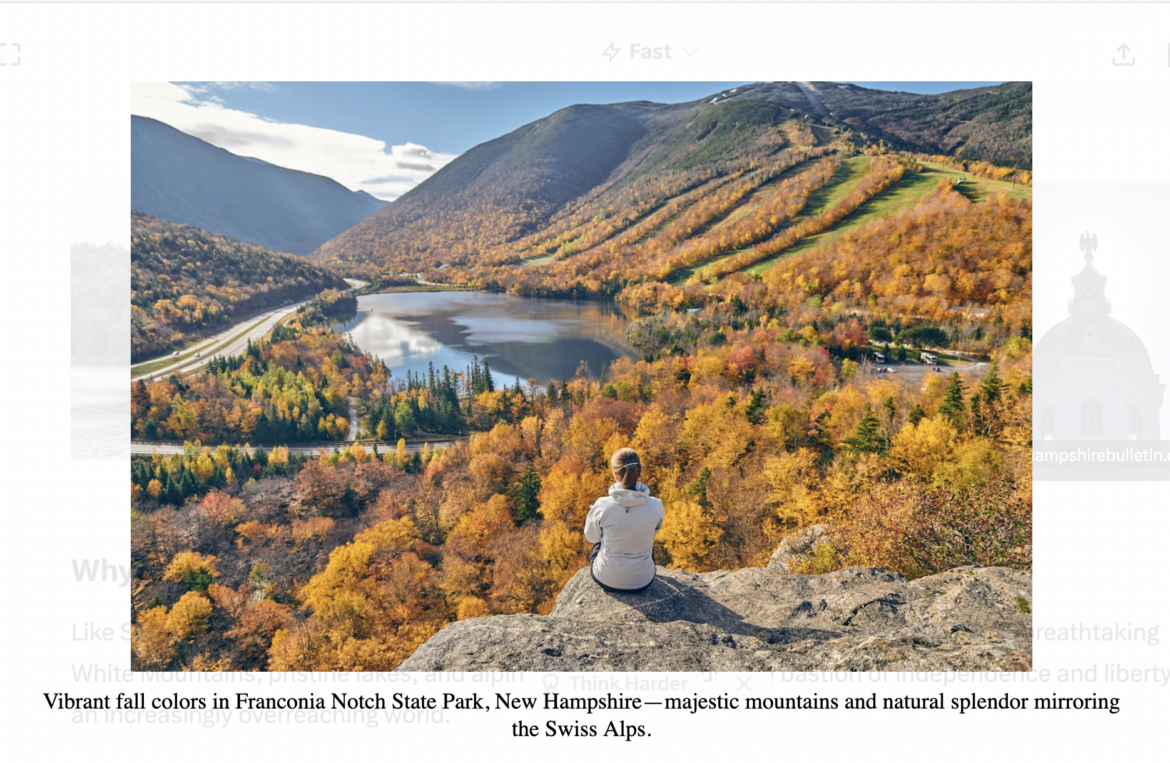

They’re choosing place over politics.

Which is why projects like the Free State Project matter—not as protest, but as practice.

As proof of concept.

As the embodied answer to: Okay, but what do we do instead?

Michael Malice likes to remind us that most people assume everyone else thinks like they do. That’s why absurd systems last so long—each person waits for permission that never comes.

So when does the charade end?

When enough people stop voting for brands

and start building alternatives.

When enough people stop outsourcing conscience

and reclaim consent.

When enough people say, quietly but firmly:

“No. This doesn’t make sense anymore.”

If you’re asking the question, you’re already early.

The clock isn’t ticking.

It’s shedding parts.

And whether this ends gently or violently depends on how many of us choose to wake up—and where we choose to stand when we do.

(Spoiler: some of us chose New Hampshire.) 🌲